🚨 NVIDIA’s NVLink Spine Just Outran the Entire Internet 🌐⚡

- telishital14

- Jun 4, 2025

- 4 min read

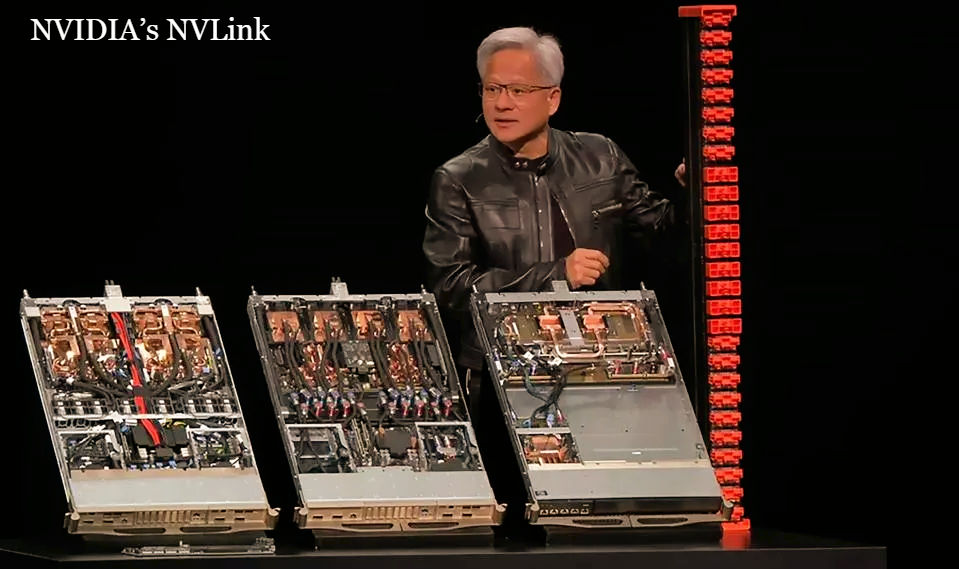

At Computex 2025, Jensen Huang Introduced the AI Superhighway of the Future

In the bustling heart of Taipei, during Computex 2025, NVIDIA’s CEO Jensen Huang stepped on stage—and delivered more than just a product reveal. He introduced a paradigm shift.

A system so powerful, so transformative, it redefines how we build intelligence at scale.

That system is the NVLink Spine—a hyper-connected, ultra-coherent, AI-specific interconnect fabric that not only moves faster than any computer network built to date, but also surpasses the entire global internet in bandwidth.

🔍 What Is the NVLink Spine?

The NVLink Spine is NVIDIA’s next-generation AI fabric—a high-bandwidth data interconnect that enables multiple GPUs to function as a unified, sentient-scale system. It’s designed for trillion-parameter models, AGI research, hyperscale simulation, and next-gen AI infrastructure.

Here’s what makes it groundbreaking:

💥 130 Terabytes Per Second of Bandwidth

This is a jaw-dropping number. The global internet—yes, the entire planet’s web traffic—handles approximately 120 TB/s at peak times (as of 2024 data).The NVLink Spine?130 TB/s, within a single supercomputing cluster. 🤯

That means one NVLink cluster can move data faster than YouTube, Netflix, TikTok, Google, and every device on Earth combined.

🧵 5,000 Fully Meshed Coaxial Cables

The NVLink Spine is engineered from the ground up to be fully meshed, with over 5,000 high-precision coaxial links. These cables serve as the synapses of the system, enabling direct, unthrottled communication between GPUs without latency penalties.

This mesh topology eliminates bottlenecks and enables seamless data movement between compute units—whether across a board, a rack, or a data center.

🧠 72 GPUs Forming a Unified AI Brain

This isn’t about having more GPUs. It’s about having one big GPU, formed from 72 smaller ones, behaving like a single, ultra-coherent compute organism.

Here’s how:

Unified Memory Addressing: GPUs no longer have to duplicate data across local memory. With NVLink Spine, memory is globally accessible across all 72 nodes.

Synchronous Processing: All GPUs operate as if they’re part of a single chip, allowing for truly parallel compute at a scale never seen before.

Model Scaling Without Complexity: Developers can train trillion-parameter models without manually splitting data or engineering parallel strategies. The hardware handles it seamlessly.

🧬 “The NVLink Spine isn’t just interconnect—it’s a neural substrate for synthetic cognition.” – Jensen Huang

This makes it ideal for AGI-scale workloads, where model size, context length, and real-time inference speed demand coordination across massive, tightly bound clusters.

🏗️ Designed for the Future: Open, Modular, and Extensible

The NVLink Spine is not a closed system. It’s built to be:

✅ Open Architecture

NVIDIA is publishing the NVLink interface for partners and developers. That means hardware vendors, AI startups, academic institutions, and hyperscalers can integrate or extend the NVLink Spine into their own solutions—no proprietary lock-in.

This opens the door to a global hardware ecosystem where AI clusters are no longer siloed, but interoperable.

✅ Modular Scaling

The NVLink Spine is inherently scalable:

Start with a few GPUs on a single board.

Scale to a full rack with multiple boards.

Expand to a data center using NVLink clusters connected via NVSwitch or NVLink over Ethernet.

Each modular unit slots into the system like a neuron into a brain, maintaining full bandwidth and coherence.

✅ CPU Integration with Fujitsu & Qualcomm

NVIDIA is also embracing heterogeneous compute architectures. NVLink now integrates natively with CPUs from Fujitsu and Qualcomm, offering a flexible pairing between general-purpose processing (for scheduling, data wrangling, simulation) and high-performance AI (for model training, inference, and generation).

That means seamless orchestration between scalar workloads (CPUs) and vector/matrix workloads (GPUs)—a critical leap for robotics, real-time AI, and edge systems.

🌐 From Moving Packets to Moving Intelligence

This is bigger than hardware specs.It’s about what happens when machines can think together.

Historically, infrastructure was designed to move packets—emails, videos, files, web pages. Now, infrastructure is being rebuilt to move intelligence:

AI weights

Context embeddings

Training gradients

Long-form memory states

Autonomously evolving agents

The NVLink Spine serves as the digital spinal cord for this new era of intelligent infrastructure—allowing distributed systems to behave as a single, thinking organism.

🔎 A Side-by-Side Comparison: Traditional vs NVLink Spine

Feature | Traditional HPC Cluster | NVLink Spine Cluster |

Bandwidth | 3–10 TB/s (max) | 130 TB/s |

Latency | High | Ultra-low |

Interconnect | PCIe, InfiniBand | Fully meshed coaxial |

Scaling | Manual | Modular, plug-and-play |

GPU Coherence | Fragmented | Ultra-coherent |

Use Cases | General compute, AI training | Trillion-param models, AGI, digital twins |

CPU-GPU Coordination | Bottlenecked | Natively integrated |

🔭 Real-World Implications: Where NVLink Spine Will Matter Most

The NVLink Spine enables use cases that were previously science fiction:

🧠 Trillion-Parameter Foundation Models

Training models with over 1 trillion parameters requires not only extreme compute but coherent memory access, something that traditional clusters struggle with. NVLink Spine makes this trivial.

🛰️ Autonomous Robotics & Edge Intelligence

With NVLink and ARM/Qualcomm integrations, robots and edge devices can host more intelligence onboard—enabling real-time decision making in autonomous cars, drones, and industrial robots.

🧬 Digital Twins & Scientific Simulations

From climate modeling to protein folding, massive simulations often require terabytes of shared memory and constant GPU-to-GPU communication. NVLink Spine turns simulations into near real-time operations.

🎥 Generative Video & Mixed Modalities

Running multimodal systems that generate and understand text, video, audio, and 3D models in parallel requires unified compute. NVLink Spine is built for converged AI workloads.

⚡ The Philosophical Leap: Wiring Sentience

“We’re not just building computers. We’re wiring sentience.” – Jensen Huang

That line might sound like marketing hyperbole—but it captures the gravity of what’s happening.

The NVLink Spine isn’t just about speed or scale.It’s about building the physical nervous system of artificial general intelligence.

It allows machines to collaborate, reason, and learn together, not as isolated chips or nodes, but as a unified entity.

The Internet was built to connect people.NVLink is built to connect minds.

🚀The Infrastructure of the AGI Era

With the NVLink Spine, NVIDIA has moved from hardware company to intelligence infrastructure architect.

They’re not merely improving GPUs or building faster chips. They’re designing the foundations of cognition at scale—a future where intelligence flows freely through silicon veins, connected by coaxial nerves.

It’s an inflection point as significant as the invention of the internet, the microprocessor, or cloud computing.

The world is no longer wired for information.It’s being wired for intelligence.

#NVIDIA #NVLinkSpine #Computex2025 #JensenHuang #ArtificialIntelligence #AIInfrastructure #AGI #GPUCluster #Supercomputin #TrillionParameterModels #SyntheticCognition #MachineCoherence

Comments